As deepfakes, AI-generated imitations of real people, grow more sophisticated, telling truth from fiction online is becoming harder. This growing threat to online security and trust has prompted Ethereum co-founder Vitalik Buterin to propose a novel defense: personalized security questions.

Pointing to how vulnerable traditional safeguards such as passwords and generic security questions are to increasingly capable deepfakes, Buterin notes that his proposal relies on something artificial intelligence has not yet fully mastered: the richness of human connection.

Ethereum Co-Founder’s Genius Hack To Outsmart Deepfakes

Instead of relying on information that is easily guessed or looked up, such as a pet's name or a mother's maiden name, Buterin's system would use questions based on shared experiences and details unique to the people interacting. Imagine being asked about an inside joke from college or the obscure nickname your grandmother gave you as a child. Personalized details like these would form a kind of memory maze that impostors trying to pass as someone else would struggle to navigate.

According to Buterin, the strength of this approach lies in its focus on details that are unlikely to appear online or to be easily guessed. By drawing on the shared history and personal knowledge that exist between two people, it offers a strong defense against impersonation and fraud.

Remembering the minutiae of our past is not always easy, however. Buterin acknowledges that memory lapses can happen but sees the difficulty as another layer of defense: recalling such obscure details is hard enough for the genuine person, and close to impossible for impostors who lack access to that personal information.

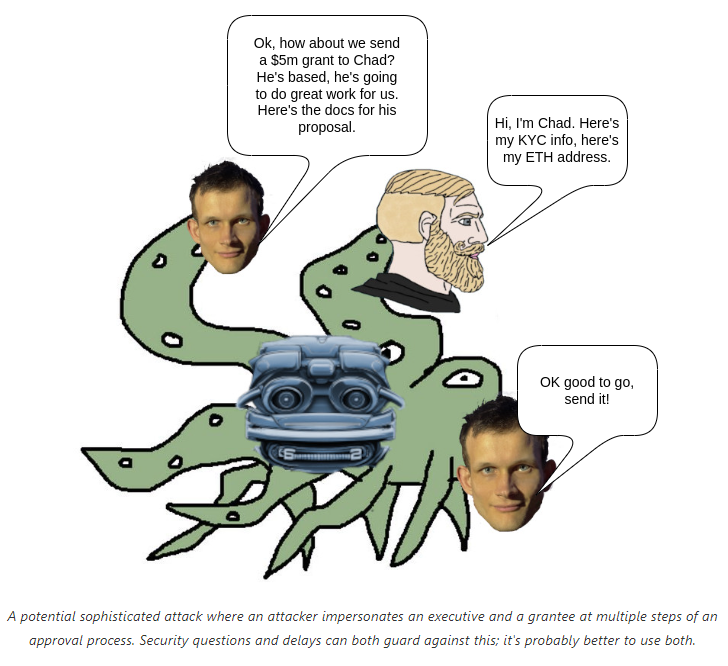

Recognizing the need for a multi-pronged approach, Buterin does not stop at personalized questions. He envisions a layered security system that also includes pre-agreed code words, subtle duress signals, and even confirmation delays for critical Ethereum transactions. Each layer acts as a separate barrier, so an attacker who gets past one still has to defeat the others.

Deepfake Threat Spurs Urgent Search For Solutions

The proposal arrives at a critical time. A recent report exposed yet another deepfake impersonating Buterin, this time used to promote a wallet drainer, underscoring the urgent need for effective countermeasures. While experts have praised the originality and potential of his approach, open questions remain.

We have seen deepfake of @VitalikButerin used to promote a wallet drainer

The scam site is strnetclaim[.]cc

Still of the video can be seen below pic.twitter.com/R8AY5CVOea

— CertiK Alert (@CertiKAlert) February 7, 2024

Putting the idea into practice raises challenges, starting with how to store these personalized questions securely. Can they be encrypted and accessed safely without becoming targets themselves? Scalability is another concern. The method may work well within close-knit groups or between people with deep shared experiences, but how would it function in broader contexts or in online interactions with strangers?

Accessibility is also a concern. Could heavy reliance on memory or on specific shared experiences create barriers for certain groups, or for people who do not recall such details as readily? Finally, because AI keeps evolving, future-proofing is crucial. Could sophisticated AI eventually infer or obtain the answers to these questions and render them ineffective?

Only time will tell whether Ethereum co-founder Buterin's memory maze can outsmart deepfakes, but one thing is clear: the proposal has sparked an important conversation about safeguarding our digital selves. In a world where even our sense of what is real is under attack, the intricacies of human memory may become the next frontier in the fight against sophisticated online impersonation.

Featured image from Adobe Stock, chart from TradingView